Training serving skew ranks as the most common problem while the deployment of ML models.

Let’s find out what the concept is and what are the prevention operations for it?

Training serving skew

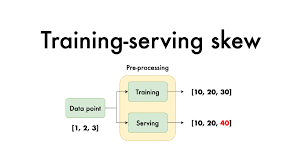

The training-serving skew is a difference in performance during training and serving.

The skew can be instigated by an inconsistency amid how you tackle data in training and how you do it in serving pipelines. It poses the change in data while you train and when you serve.

Common Machine Learning Operations

For training an ML model, one follows the same series of steps, which include:

- Fetching data

- Cleaning data by fixing or discarding tainted interpretations

- Generation of the features

- Training model

- Evaluating model

After cleaning the data, transformations are applied to it, to make learning problems a bit easier.

When it comes to feature engineering, it is an important task while using tabular data with conventional Machine learning models – which is common in industry. The exception is the deep learning models, in which hardly any feature engineering involved.

In deployment of a model, pipelines are comparable, but while making predictions with a formerly trained model after feature computation. Still, not every deployment is equal; two settings are:

- Batch – weekly predictions for every user – and uploading these predictions to the database.

- Online – exposing the model as REST API for making at request predictions.

Feature Engineering what is it about?

It is a collection of independent transformations which operates on a group or single observation(s).

Practically, none of the data from training set becomes part of transformation.

However, this diverges with pre-processing approaches like feature scaling, in which data from training set is utilized as a measure of transformation.

Pre-processing approaches are not considered as feature engineering, however, these are a component of the model as the standard deviation and mean are taken up from a training set itself and then are made functional to the test set validation.

Training Serving Skew – Explained

Normally we should be re-using the feature engineering code for making sure that the raw data precisely maps to the equivalent feature vector – during training and serving instances. However, if this is not the case, then have training serving skews for the rescue.

One basic motive for this is a disparity of computing resources.

When working on a new machine learning project and writing a pipeline, months later, you get first of many version ready to deploy as micro-service. It is quite inefficient for needing the micro-service to connect for making a prediction. Thus your plan is to again implement the feature code using NumPy/pandas.

Now, you are left with two codebases for maintenance – yeah, too much to handle.

This still is a small issue since working with same resources at both serving/training instances – but remember to use a training stack whenever possible- one that can be utilized on serving instances also.

Now if you can’t re-use the feature engineering code; it is advised to test training serving skew before deployment of a fresh model. For doing so, pass raw information through the feature pipelines and compare output – the raw vectors should now map same output feature vector.

What is training skew caused by?

It is a difference between the model performances during training and during serving.

This skew can be instigated by a difference in how you tackle data in both serving and training pipelines.

Mathematically, we can define the feature engineering process as a function transforming raw input into another vector used to train the model.

What are the solutions for dealing with training-serving skew?

We will discuss two solutions here:

Workflow Incremental Manager

Development of features is an iterative process – accelerating it with incremental forms helps with tracking of source code fluctuations and helps skip the redundant work.

For instance, when you have 10 independent features – when you adjust one feature, you can easily skip the other as they will generate indistinguishable results to their previous executions.

These are frameworks allowing us to describe the computation graphs like the feature codes. From plenty of options to choose from, only a few support an incremental build.

Feature Stores

There is another solution, though, that is not as invasive as the others – using feature stores.

A feature store is a peripheral structure that computes, and you only have for fetching ones you need for model training and serving.

For more information on the feature store and working and solution of training-serving skew, head to Qwak today!

Plagiarism Report Copyscape